Data is the key to Artificial Intelligence. “Garbage in, garbage out” has been a core concept in computing for decades, and it is especially relevant when you make decisions based on data.

Marketing by technology vendors has fostered the idea that riding each wave (first CRM, then Big Data, and now AI) was simply a matter of getting the latest version of their products. However, those who have already swallowed plenty of salt water know that Excel with good data and sound processes beats a compelling platform with inconsistent data. Likewise, a model based on simple, tested and controlled statistical correlations beats a sophisticated data science model built on unverified data.

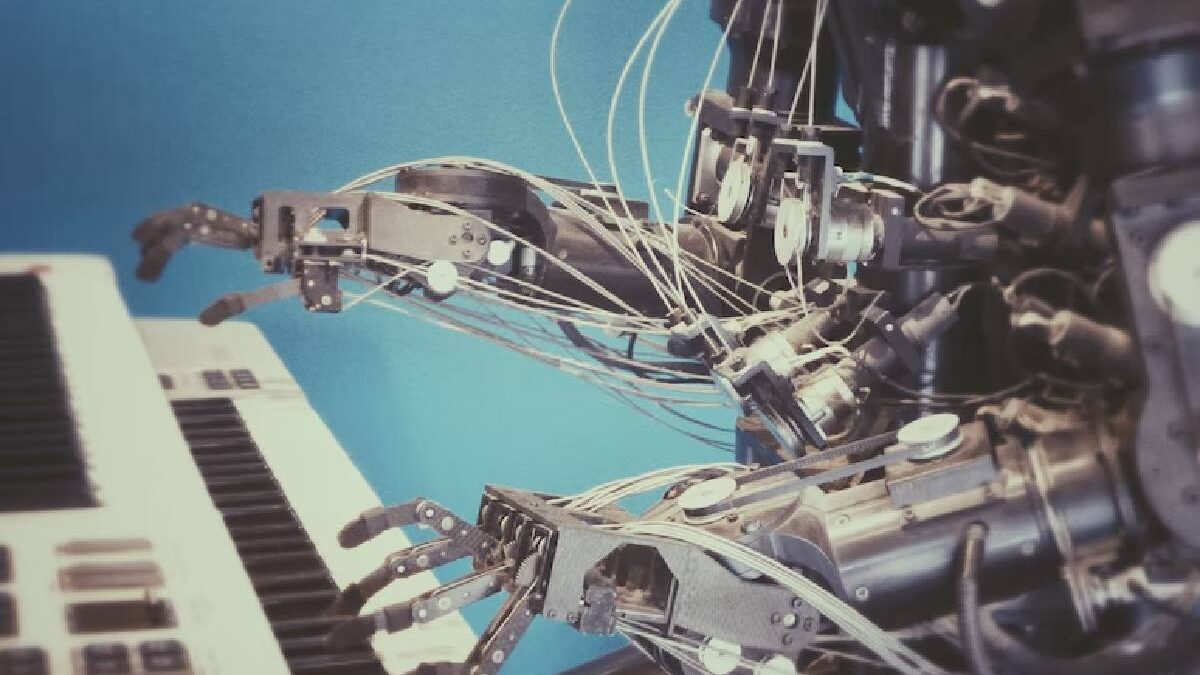

After all, machine learning, the most common AI tool, is nothing more than a data analysis method that automates model building by parsing data. It learns by identifying patterns in the data, and then makes predictions and decisions with minimal human intervention.

The power of machine learning derives from the wealth of data it is fed. For this reason, your machine learning models must work with correct, unbiased, coherent data that allows their algorithms to learn. Peter Norvig, Director of Research at Google, stated: “It’s not that we have the best algorithms… we just have more data.”
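To make the "garbage in, garbage out" point concrete, here is a minimal sketch of how a single mistyped record can distort even a very simple model. The data is made up for illustration, and `fit_line` is a hypothetical helper that implements ordinary least squares by hand; it is not taken from any particular library.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Made-up data that follows y = 2x + 1 exactly.
xs = [1, 2, 3, 4, 5]
clean_ys = [3, 5, 7, 9, 11]

# The same data with one typing error: 110 instead of 11.
dirty_ys = [3, 5, 7, 9, 110]

clean_slope, clean_intercept = fit_line(xs, clean_ys)
dirty_slope, dirty_intercept = fit_line(xs, dirty_ys)

print(f"clean data: slope={clean_slope:.1f}, intercept={clean_intercept:.1f}")
print(f"dirty data: slope={dirty_slope:.1f}, intercept={dirty_intercept:.1f}")
```

With the clean values the fit recovers a slope of 2 and an intercept of 1. With one bad record out of five, the estimated slope jumps to roughly 21.8, so every prediction made from the model is off, no matter how sophisticated the tooling around it is.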

Steps to prepare your data for machine learning processes:

- Cleaning and normalization of data so that it is valid, complete and consistent. To relate data from different environments, we must bring it into a standard format. A data model is of little use if the records are wrong, so they need to be normalized first. This data plumbing work is vital, and at DataCentric we have extensive experience in data quality work ahead of any merge process in large data environments. In many cases, it will require setting up a Master Data Management (MDM) system.

We cannot trust information that has not been tested. We need to run tests on the data to check whether it yields consistent values and to investigate unexpected results.
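The cleaning, normalization and testing described above can be sketched in a few lines. This is only an illustration under stated assumptions: the records, field names, accepted date formats and the deliberately simple email pattern are all hypothetical, and a real project would define its own rules.

```python
import re
from datetime import datetime

# Hypothetical raw records from different systems, with inconsistent formats.
RAW_RECORDS = [
    {"name": "  ana GARCÍA ", "email": "Ana.Garcia@Example.COM", "signup": "2021-03-05"},
    {"name": "Luis Pérez", "email": "luis.perez@example.com ", "signup": "05/03/2021"},
    {"name": "", "email": "not-an-email", "signup": "2021-13-40"},
]

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")  # assumed input formats
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # simple sanity check only


def parse_date(value):
    """Return the date as an ISO string, or None if no known format matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(value.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None


def normalize(record):
    """Bring one record into a standard format."""
    return {
        "name": " ".join(record["name"].split()).title(),
        "email": record["email"].strip().lower(),
        "signup": parse_date(record["signup"]),
    }


def validate(record):
    """Return the list of problems found in a normalized record."""
    problems = []
    if not record["name"]:
        problems.append("missing name")
    if not EMAIL_RE.match(record["email"]):
        problems.append("invalid email")
    if record["signup"] is None:
        problems.append("unparseable date")
    return problems


normalized = [normalize(r) for r in RAW_RECORDS]
clean = [r for r in normalized if not validate(r)]
rejected = [(r, validate(r)) for r in normalized if validate(r)]

print(f"{len(clean)} clean records, {len(rejected)} rejected")
```

Rejected records are kept together with the reasons for rejection instead of being dropped silently, so that unexpected results can be investigated rather than hidden.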

- Data connection platform (APIs). Once the data is clean, the different data environments must be linked through the master data generated in the previous phase. In a Big Data environment, the data logic will usually be distributed, which creates the need for APIs.

- Data enrichment. The quality of your sources defines your data. With internal data alone, you have a biased sample that ignores everything you don’t know.

For the Spanish market, DataCentric has more than 2,600 different variables, including sociodemographic, business, behavioural and financial information.
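The connection and enrichment steps above can be illustrated with a small sketch. Everything here is an assumption made for the example: the system names, the IDs, the `household_size` variable and the helper functions are hypothetical, and plain in-memory dictionaries stand in for what would normally be responses from separate APIs.

```python
# Master data from the cleaning phase: each source system's local ID
# is mapped to a single master ID per customer (all values are made up).
MASTER_INDEX = {
    ("crm", "C-001"): "M1",
    ("billing", "9001"): "M1",
    ("crm", "C-002"): "M2",
}

# Stand-ins for data that distributed systems would expose through APIs.
CRM = [{"id": "C-001", "name": "Ana García"}, {"id": "C-002", "name": "Luis Pérez"}]
BILLING = [{"id": "9001", "total_spent": 1250.0}]

# Hypothetical external variables keyed by master ID.
EXTERNAL = {"M1": {"household_size": 3}, "M2": {"household_size": 1}}


def link(sources, master_index):
    """Merge records from several systems into one profile per master ID."""
    merged = {}
    for system, records in sources.items():
        for record in records:
            master_id = master_index.get((system, record["id"]))
            if master_id is None:
                continue  # unmatched records need review, not a blind merge
            fields = {k: v for k, v in record.items() if k != "id"}
            merged.setdefault(master_id, {}).update(fields)
    return merged


def enrich(profiles, external):
    """Add external variables to each internal profile."""
    return {mid: {**profile, **external.get(mid, {})} for mid, profile in profiles.items()}


profiles = enrich(link({"crm": CRM, "billing": BILLING}, MASTER_INDEX), EXTERNAL)
print(profiles["M1"])
```

The master ID is what makes both steps possible: without it, the CRM and billing records for the same customer could not be joined reliably, and external variables would have nothing stable to attach to.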

Finally, just as Artificial Intelligence needs clean data, Big Data needs AI to analyze and interpret large volumes of unstructured data, such as images, logs, and videos. It is a symbiotic relationship: Big Data needs Artificial Intelligence systems to convert data into information, value and business for a company.